Last week at Microsoft Build the Power BI team announced Datamarts for Power BI. (Main blog post, Mechanics demo video)

After spending some of my weekend playing with these features I thought I would share my first impressions.

What has been released?

In summary:

- A special dataflow that deposits data into an…

- automatically provisioned Azure SQL database…

- which is then used as the data source for an automatically created DirectQuery dataset.

- All the above is editable via a single web UI and treated as one package.

How does it work?

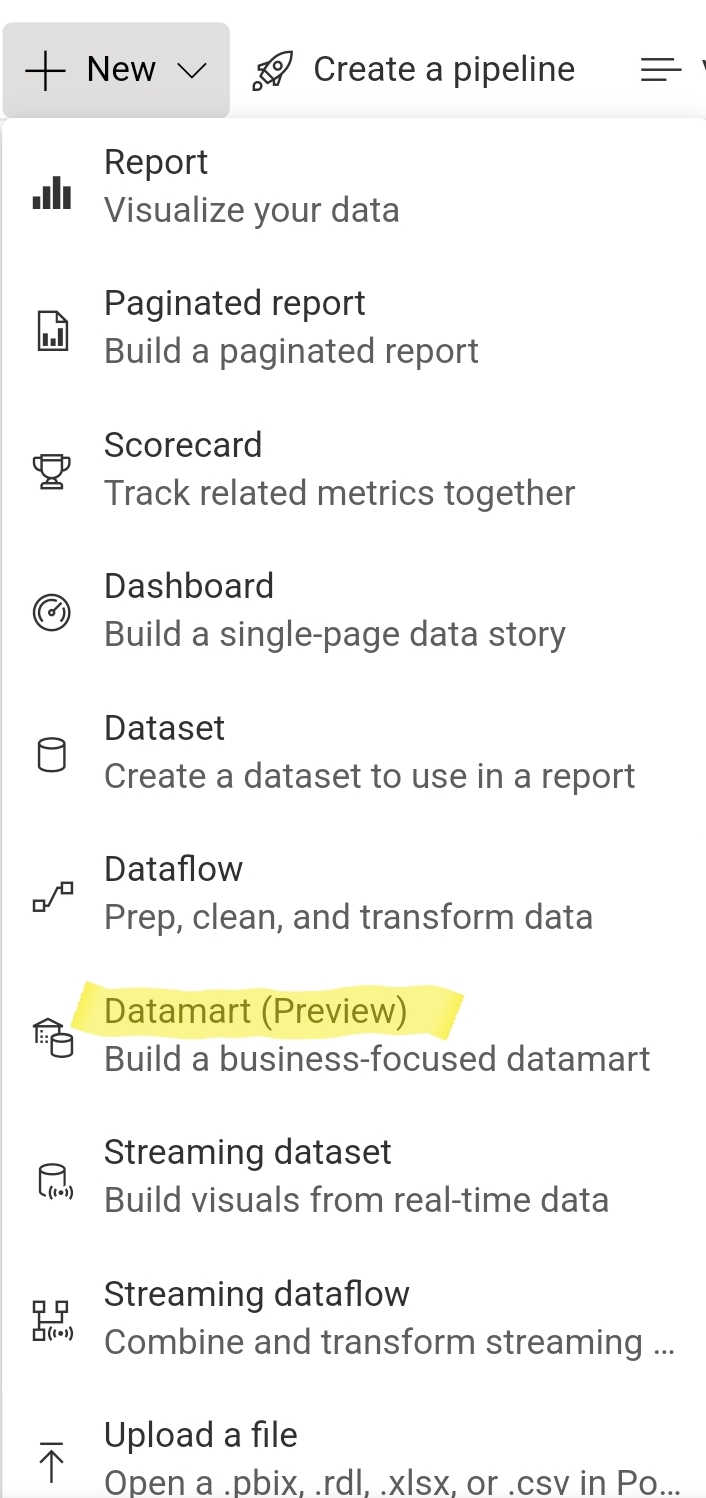

When you log onto app.powerbi.com and go into a premium workspace (premium capacity or per user), you’ll see the ability to create a new Datamart.

This will open a new web experience where you then need to create a special kind of Power Query dataflow, except this time your dataflow will deposit the data into an automatically provisioned Azure SQL database (an elastic general purpose database). Also, your dataflow will only be editable within the new Datamart UI and will not be treated as a regular dataflow… Except that it very much looks like a regular dataflow but now it deposits the data into an Azure SQL database instead of a datalake (CDM format).

On top of this, a read-only (and “locked down”) DirectQuery dataset will be automatically provisioned that is based on the data inside the Azure SQL database. It is locked into sharing the same name as the Datamart. The dataset can also only be edited via the Datamart web UI (you cannot connect to the Datamart’s auto-DQ dataset via external tools such as Tabular Editor or SQL Server Management Studio for editing).

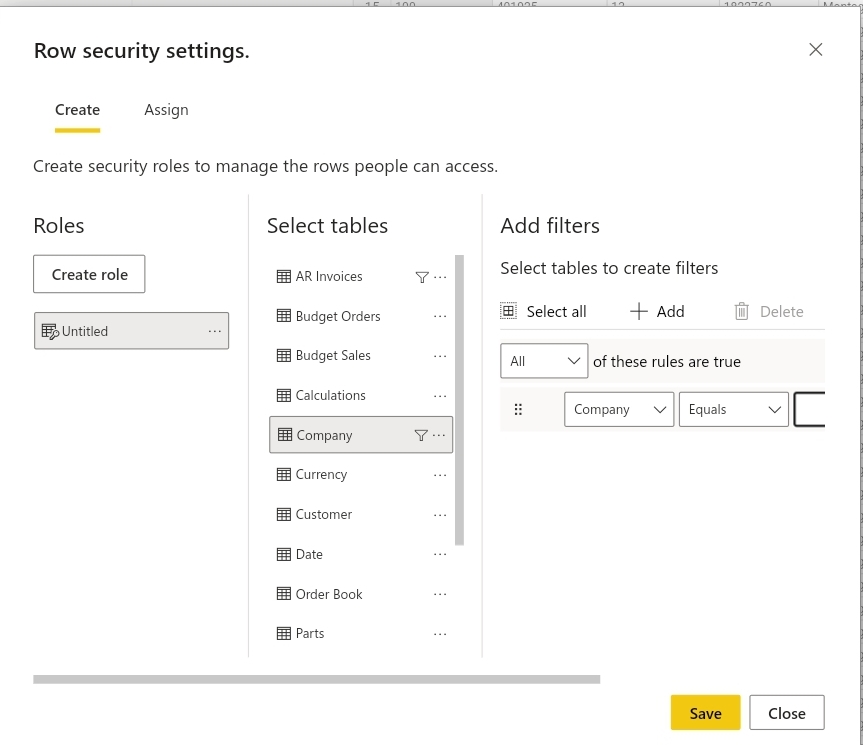

The reason for the locked down approach is likely because from the Datamart web UI you can create roles that in turn will create roles for both the auto-DQ dataset as well as database roles on the Azure SQL DB.

Measures and relationships are also able to be created in the web UI, which reminds me almost of the Azure Analysis Services Web designer UI before it got shut down.

On top of that, there’s also a simple interface for running SQL queries and “visual” queries against the database.

So why are Power BI Datamarts such a big deal?

The big draw card in all of this is really the creation and automatic provision of Azure SQL databases, as well as being able to easily load them from Power Query.

The other items (in my opinion) are nice to have but are also use-case specific:

- The web UI for data modelling is nice for lowering the barrier for newcomers but I’m unlikely to use it (creating measures and managing the data model will always be more efficient in Tabular Editor).

- The auto-DQ dataset is interesting, but it steers people away from import mode and taking full advantage of the incredible Vertipaq engine, probably Power BI’s strongest selling point.

Why is being able to create and load data into an Azure SQL DB so hyped?

- It let’s you seperate the query logic layer from the data model layer.

- It allows for other tools to analyze the data besides Power BI (e.g. any tool that uses SQL)

- It gives you a good place to store additional / infrequent information, such as budgets, forecasts, and other supplemental data.

But above all, it cuts through several barriers that users face when they want to set up a reporting solution. Instead of having to get access to an Azure subscription to set up an Azure Data Factory resource and Azure SQL DB resource, you can now just do it with the click of a button. For business users trying to come up with robust, supportable solutions, this is an absolute godsend.

For internal IT departments it may seem daunting that users are creating their own Azure SQL databases, but in most cases this may be being done anyway by more advanced users, either through local SQL installs at the Business unit level, Access databases, inefficient SharePoint lists, or a network of Excel files as “databases”.

IT departments should keep an eye on overall deployments, resource consumption, and data sensitivity, but for the most part this change is just another in a long process of IT departments transitioning away from being data gatekeepers towards being data gardeners (by helping the business “grow” their analytics capabilities).

Why is loading from a dataflow into an Azure SQL DB better than into a datalake?

Both contain type information, both load quickly, both can be connected to via Power BI Desktop, however there are some differences:

Loading a dataflow into their standard datalake is much “cheaper” storage as it’s just blob storage (CSVs + some json metadata files).

Loading a dataflow into an Azure SQL DB allows you to assign roles and row level security, as well as allows for simple connections from a variety of tools, (Tabular Editor provider datasource, SSMS, Python, and anything else that can connect to a SQL database).

Rough Edges and improvements to be made

With all the benefits so far about this new package of features, there are still some improvements that could be made:

- It is very much a package of features. If you didn’t want your DirectQuery dataset, too bad you’ve now got one. Don’t want to use the web UI and would rather use Tabular Editor? Not possible.

- When first creating the Datamart you don’t input a name, instead it gets given an autogenerated name that then needs to be renamed afterwards.

- When you go to create a dataflow it asks if instead you would like to create a Datamart, when they’re not really synonymous. Instead it would make more sense that when you make a dataflow you can choose whether to load the data into a datalake or into a database. Support this idea here.

- People have reported different experiences, but trying to build an “import” dataset off of the Azure SQL DB was not workable for me. The main issue I ran into no matter what method I used was that the Azure SQL DB appeared to throttle my queries hard. Very occasionally I could load a 200k row table, but most times it would stall at 50k and then time out after several hours. To me this is an absolute deal breaker and removes an incentive to use the feature, so hopefully as Datamarts goes through preview these kinds of issues get ironed out.

Final thoughts

I look forward to when the Azure SQL DB can handle me querying all of its data into my import model. Once this is possible it will be an absolute game changer.

I’m not convinced about the forcing of DirectQuery onto us or the requirements to use the web UI. Hopefully this will get removed and split out.

The story of dataflows, Datamarts, and people comparing them is confusing. To simplify this story I really think the Power BI team should position dataflows as a tool to transfer data from source systems into a storage destination (whether that be datalake storage or relational database storage).

Allowing dataflows to choose their destination simplifies the story for users:

— James Fancke (@jdfancke) May 29, 2022

- a dataflow is a way to get data from source systems into a storage destination

- you can pick either basic datalake storage (CSVs+manifest/typing) or richer relational database storage (Azure SQL DB)

Finally, I read a decent blog post by Mim that also has more specific detail behind Datamarts and recommend giving it a read.